The brief.

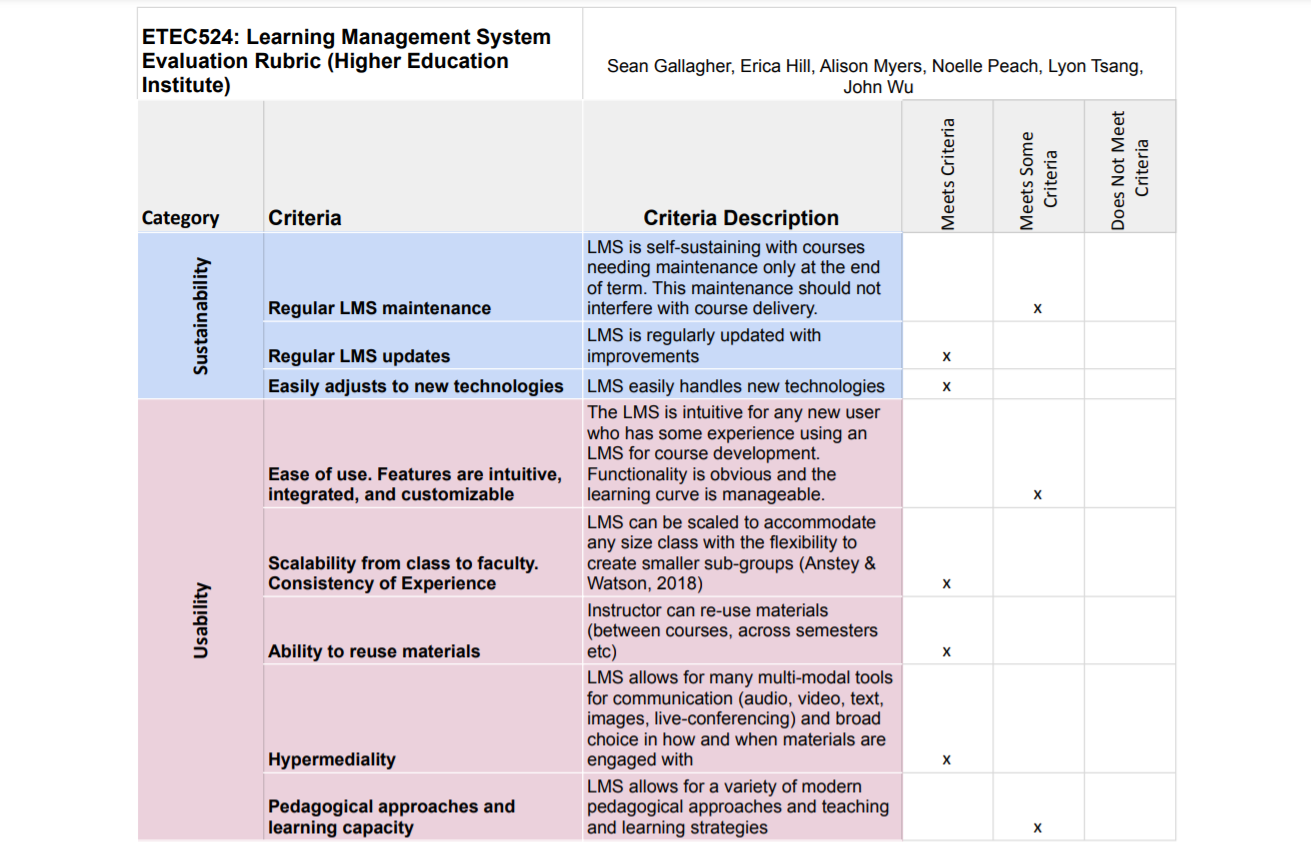

In this assignment, we were asked to work in groups to develop an evaluation rubric for selecting a learning environment for a given context. Our group’s context was higher education, particularly a larger school with challenging demands on learning environments. The assignment included a summary of our group’s scenario; our detailed evaluation rubric, organized by categories; and one paragraph explaining our choices, grounded in the course literature.

The takeaways.

The 'Learning Environment Evaluation Rubric Assignment' was an informative exercise in simulating how an institution might decide which criteria to consider when evaluating a new learning management system (LMS) or content management system (CMS). We started by imagining a scenario in higher education where a new LMS would be brought in as an instructional aid. We chose a large institution like UBC whose contract with the incumbent LMS provider was coming due, and focused particularly on the context during and after Covid-19, when reliance on the LMS is heavier because more students are studying from home and internationally.

Our rubric criteria were adapted in part from the MIT CITE and IIM framework (Osterweil et al., 2015) and the Anstey & Watson (2018) rubric for evaluating e-tools in higher education. We concentrated on concerns larger institutions might have and created a very detailed rubric for our evaluation process. The full rubric was then condensed into a checklist used to directly compare each LMS in our pool of selections. Had we been allowed a few more words, or found a slightly different structure for our report, I would have liked to focus more on the learning and pedagogy afforded by the LMS.

What I especially liked about the MIT CITE and IIM framework (Osterweil et al., 2015) and the Anstey & Watson (2018) rubric for evaluating e-tools in higher education was their respective focus on learning experiences, such as metacognition, and on teaching pedagogies. Our rubric was directed more toward logistics like cost, privacy and security, and data and reporting. I fully recognize the importance of thinking through the logistics of each LMS. However, I wanted to think more about the actual pedagogical affordances of an LMS. I was also keen to consider accessibility, not in the international student or device sense, but in the sense of allowing differently abled students to participate with equity on the LMS. I would have liked a bit more preparation in thinking about learning environments this way, and a bit more space for reaching beyond the logistics of the LMS.

On the other hand, I learned a tremendous amount during the simulation. I enjoyed considering what a university will need as it moves past the pandemic. At my own institution, I was responsible for moving our course online. Using Moodle, I was anxious to find a way to maintain the integrity and spirit of the course online, only to discover that many of the plugins instructors could use to engage students were not enabled. In particular, I had wanted to use H5P for interactive content, but it wasn't available as a front-end plugin for instructors (Moodle, n.d.). I think considering how to keep using learning environments like an LMS or CMS more holistically post-pandemic to support instruction was an important exercise.

Finally, it was a pleasure to do some group work in this assignment, and I think that in itself was a small simulation of how a committee might go about deciding which LMS or CMS to implement.

References

Anstey, L., & Watson, G. (2018). A rubric for evaluating e-learning tools in higher education. Educause. Retrieved June 3, 2021, from https://er.educause.edu/articles/2018/9/a-rubric-for-evaluating-e-learning-tools-in-higher-education

Moodle plugins directory: Interactive Content – H5P. (n.d.). Moodle.org. Retrieved June 6, 2021, from https://moodle.org/plugins/mod_hvp

Osterweil, S., Shah, P., Allen, S., Groff, J., Sai Kodidala, P., & Schoenfeld, I. (2015). Summary report: A framework for evaluating appropriateness of educational technology use in global development programs. The Massachusetts Institute of Technology, Cambridge, Massachusetts & The Indian Institute of Management, Ahmedabad, India. Retrieved from https://dspace.mit.edu/bitstream/handle/1721.1/115340/Summary%20Report_A%20Framework%20for%20Evaluating%20Appropriateness%20of%20Educational%20Technology%20Use%20in%20Global%20Development%20Programs.pdf?sequence=2&isAllowed=y